The WordPress community has been discussing a proposal to force disable FLoC on all websites. Unfortunately, it doesn’t look like it will lead anywhere.
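For context, a site opts out of FLoC by sending one response header: `Permissions-Policy: interest-cohort=()`. Here is a minimal sketch of what "disable it everywhere" amounts to, assuming a Python WSGI site. The `disable_floc` name is made up for illustration, and a WordPress site would do the equivalent in PHP.

```python
# Header that tells the browser not to include this site in cohort calculation.
FLOC_OPT_OUT = ("Permissions-Policy", "interest-cohort=()")


def disable_floc(app):
    """Wrap a WSGI app so its responses carry the FLoC opt-out header.

    Hypothetical helper for illustration; not part of any framework.
    """
    def wrapped(environ, start_response):
        def patched_start(status, headers, exc_info=None):
            # If the site already sends a Permissions-Policy header, leave it
            # untouched. This sketch does not try to merge directives.
            already_set = any(name.lower() == "permissions-policy"
                              for name, _ in headers)
            if not already_set:
                headers = list(headers) + [FLOC_OPT_OUT]
            return start_response(status, headers, exc_info)
        return app(environ, patched_start)
    return wrapped
```

Wrapping the application once (`application = disable_floc(application)`) is enough; every response then carries the header unless the site already sets its own policy.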

Unlike the WordPress leadership, the EFF have strongly rejected FLoC due to its privacy risks and individualised profiling. The Brave browser’s position is that FLoC “materially harms user privacy”, and the Vivaldi browser warns that FLoC leads users to “unwittingly give away [user] privacy for the financial gain of Google”. DuckDuckGo went so far as to create a FLoC-blocking browser extension.

Google seems to be having a hard time explaining that FLoC really isn’t as bad as it seems. When asked about the implications of FLoC in a Make WordPress Core chat on Slack, all we heard from Google representatives was political hedging and links to websites that didn’t answer our questions. And here’s a Twitter thread with the Google Chrome Developer Relations Lead that’s a microcosm of that experience.

Please, let’s put away the “FLoC tries to make advertising more private” argument. No. FLoC makes fingerprinting easier than ever, not harder – your cohort ID is just another data point to help pick you out of a crowd.
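To put rough numbers on that, here is a back-of-the-envelope sketch. Every figure is hypothetical (the population, the fingerprint crowd and the cohort size are made up), and it assumes the cohort is statistically independent of the rest of the fingerprint, which real data won't perfectly satisfy.

```python
import math


def identifying_bits(population: int, group_size: int) -> float:
    """Bits of identifying information revealed by belonging to a group."""
    return math.log2(population / group_size)


def remaining_crowd(population: int, *bits: float) -> float:
    """Expected number of browsers still matching after combining signals.

    Assumes the signals are independent, so their bits simply add up.
    """
    return population / 2 ** sum(bits)


# Hypothetical figures, for illustration only.
population = 1_000_000
fingerprint_bits = identifying_bits(population, 1_000)  # fingerprint shared by 1,000 browsers
cohort_bits = identifying_bits(population, 2_000)       # cohort shared by 2,000 browsers

without_cohort = remaining_crowd(population, fingerprint_bits)
with_cohort = remaining_crowd(population, fingerprint_bits, cohort_bits)
```

Under these made-up numbers the fingerprint alone leaves you hiding among about 1,000 browsers; add the cohort ID and the crowd shrinks to about 2. The exact figures don't matter. The direction does: each extra signal only ever makes the crowd smaller.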

Worse – FLoC reveals interest data to websites that already have your personally identifiable information (wherever you’ve signed up with your email address, for example). The inevitable outcome is that your interest data will at some point be made public. Imagine a world where dark web markets sell databases of email addresses linked to their owners’ interests and the URLs they’ve visited!

The issues with FLoC extend far beyond privacy. You think Twitter bubbles are a problem now? FLoC enables an entire web that’s bubbled specifically for you. News sites could show alternate versions of articles based on your cohort. Community groups might limit new memberships based on your algorithmically defined interests. Ecommerce stores will change their prices based on the websites you’ve visited recently.

If there’s one thing we’ve learned over the past decade, it’s that perverse incentives have a habit of leading to perverse outcomes. Nobody wants to trust Google, or any other company with a business model rooted in manipulating you with personalised advertisements. Nobody is falling for the “Interest-based Targeting” parlance. It’s just a poor attempt at rebranding “Personalised Ads” after years of bad press.

John Gruber puts it perfectly:

> Just because there is now a multi-billion-dollar industry based on the abject betrayal of our privacy doesn’t mean the sociopaths who built it have any right whatsoever to continue getting away with it. They talk in circles but their argument boils down to entitlement: they think our privacy is theirs for the taking because they’ve been getting away with taking it without our knowledge, and it is valuable.

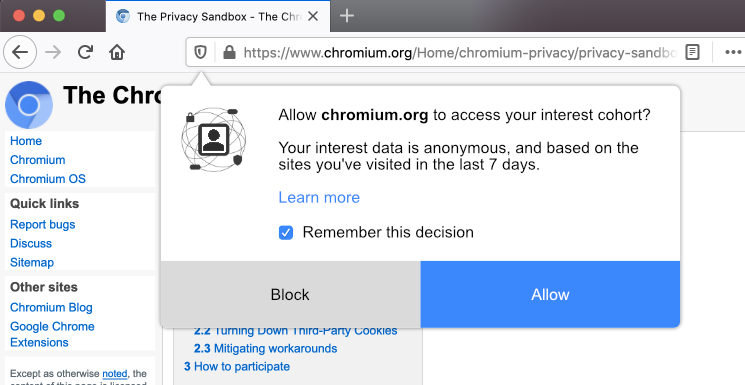

Here’s an idea, Google. Stop planning the future of the web on Zoom calls with your advertising tech mates. There’s a simpler solution. Give browser users the ability to choose exactly who they want to share their data with, and for what purposes, rather than going behind our backs to neatly arrange us into cohort clusters for advertisers to conveniently target.

Information used for tracking must belong to the users whose behavior and interests are being tracked, not to Google or advertisers, no matter how anonymous the data is made to be. Data ownership means the right to say who gets what data and when, as well as understanding what it’s going to be used for and how long it will be held. That basic right is one which Google, and its ad-tech buddies, aren’t willing to grant.

Algorithmic profiles powering personalised ads might be good for business, but they’re bad for the web.